Part 2 - Exploring OpenAI Agent SDK with Local Models (Ollama)

Recap

Welcome back! If you're wondering why I'm welcoming you back, go read Part 1 of this series first.

So, continuing our journey, it's now time to bring more agents into the mix and see how the SDK facilitates multi-agent coordination.

Multi Agents

Creating more than one agent is as simple as creating multiple instances of the `Agent` class. Let's look at some examples.

```python
import asyncio
import json

from util.ollamaProvider import OllamaProviderAsync  # (1)
from agents import (
    Agent,
    ModelSettings,
    RunResult,
    Runner,
    function_tool,
    set_tracing_disabled,
)

set_tracing_disabled(disabled=True)

accounts_agent = Agent(
    name="Accounts Agent",  # (2)
    handoff_description="Specialist agent for customer accounts",  # (3)
    instructions="You provide assistance with customer accounts. Answer questions about customer accounts.",  # (4)
    model=OllamaProviderAsync().get_model(),  # (5)
)
```

1. As you'll remember from Part 1, we learned how to create a `ModelProvider` instance that returns an `OpenAIChatCompletionsModel` backed by Ollama. I simply packaged that piece as a submodule so that I can reuse it in all of my further explorations.
2. We now create our first agent, named `Accounts Agent`.
3. We fill in the `handoff_description`, which a higher-level agent (we will see this later) uses to decide whether or not to hand control off to this agent.
4. Then, we provide a system instruction via `instructions`.
5. Finally, we set `model` to `OllamaProviderAsync().get_model()` to force the agent to use our local model.

Please note that even though the OpenAI Agents SDK provides a sync version of the `run()` method, i.e. `Runner.run_sync()`, unfortunately it is not available for non-OpenAI models. This is because `OpenAIChatCompletionsModel` holds only an `AsyncOpenAI` client rather than an `OpenAI` client (the sync version), as is evident from its constructor, which takes an `openai_client: AsyncOpenAI` argument.

Great! Now let's create a few other agents and see how they can communicate with each other.

```python
credit_card_agent = Agent(  # (1)
    name="Credit Card Agent",
    handoff_description="Specialist agent for customer credit cards",
    instructions="You provide assistance with customer credit cards. Answer questions about customer credit cards.",
    model=OllamaProviderAsync().get_model(),
)


@function_tool  # (4)
def get_wire_transfer_status(order_id: str):
    """
    Retrieve the status of a wire transfer for a given order ID.

    Args:
        order_id (str): The unique identifier of the order.

    Returns:
        str: A message indicating the wire transfer status for the specified order ID.
    """
    print("[debug] Getting wire transfer status")
    return f"Wire transfer status for {order_id}: Completed"


wire_transfer_agent = Agent(
    name="Wire Transfer Agent",  # (2)
    handoff_description="Specialist agent for customer wire transfers",
    instructions="You provide assistance with customer wire transfers. Answer questions about customer wire transfers.",
    model=OllamaProviderAsync().get_model(),
    tools=[get_wire_transfer_status],  # (3)
)

main_agent = Agent(
    name="Operator Agent",  # (5)
    instructions="You determine which agent to use based on the user's question.",
    model=OllamaProviderAsync().get_model(),
    handoffs=[accounts_agent, credit_card_agent, wire_transfer_agent],
)
```

1. We create a `credit_card_agent` with its relevant `handoff_description` and `instructions`.
2. We then create a `wire_transfer_agent` with its relevant `handoff_description` and `instructions`.
3. For the `wire_transfer_agent`, we also provide access to the `get_wire_transfer_status` tool,
4. which is nothing but a function that returns a dummy status, decorated with the `function_tool` decorator. The function's name and docstring are sufficient for the agent to select this tool when warranted.
5. Finally, we create the `main_agent`, a.k.a. the routing agent, called Operator Agent, and set its `handoffs` to the list of allowed sub-agents.
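To get a feel for why a name, type hints and a docstring are enough, here is a simplified sketch of how a decorator like `function_tool` could turn a plain function into the tool description the LLM sees. The helper `describe_tool` is hypothetical and uses only the standard library; the SDK's real implementation does considerably more (richer type handling, full docstring parsing), so treat this as an illustration rather than the SDK's algorithm.

```python
import inspect
import json


def describe_tool(func) -> dict:
    """Hypothetical helper: build a JSON-schema-like tool description from a function."""
    sig = inspect.signature(func)
    doc = inspect.getdoc(func) or ""  # getdoc() also removes the docstring's indentation
    # Map a few Python annotations to JSON-schema type names; default to "string".
    type_names = {str: "string", int: "integer", float: "number", bool: "boolean"}
    properties = {
        name: {"type": type_names.get(param.annotation, "string")}
        for name, param in sig.parameters.items()
    }
    required = [
        name for name, param in sig.parameters.items()
        if param.default is inspect.Parameter.empty
    ]
    return {
        "name": func.__name__,                 # the function name becomes the tool name
        "description": doc.split("\n\n")[0],   # first paragraph of the docstring
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def get_wire_transfer_status(order_id: str):
    """
    Retrieve the status of a wire transfer for a given order ID.

    Args:
        order_id (str): The unique identifier of the order.
    """
    return f"Wire transfer status for {order_id}: Completed"


if __name__ == "__main__":
    print(json.dumps(describe_tool(get_wire_transfer_status), indent=2))
```

A descriptive function name and a clear first docstring line carry most of the weight here, which is why they matter so much for tool selection.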

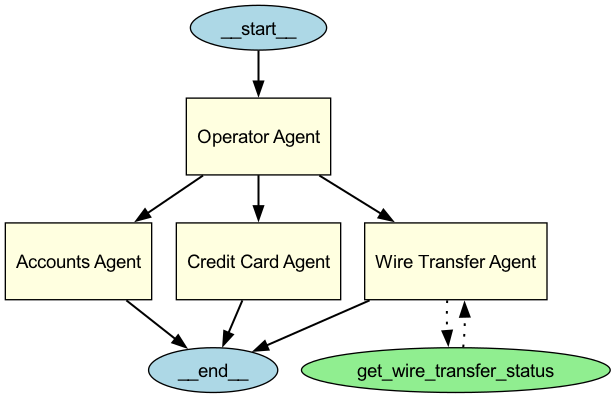

Here is a graphical view of the orchestration we made so far:
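According to the OpenAI Agents SDK documentation, handoffs are represented to the LLM as tools, so a handoff to an agent named "Wire Transfer Agent" shows up as a tool named `transfer_to_wire_transfer_agent` (exactly the name that appears in the output further down). The sketch below approximates that naming with a hypothetical `handoff_tool_name` helper. The regex-based slugging is my assumption, not the SDK's code, and using each agent's `handoff_description` verbatim as the tool description is a simplification; the SDK's actual description text may differ.

```python
import re

# The three sub-agents from the example above, as (name, handoff_description) pairs.
SUB_AGENTS = [
    ("Accounts Agent", "Specialist agent for customer accounts"),
    ("Credit Card Agent", "Specialist agent for customer credit cards"),
    ("Wire Transfer Agent", "Specialist agent for customer wire transfers"),
]


def handoff_tool_name(agent_name: str) -> str:
    """Hypothetical helper: 'Wire Transfer Agent' -> 'transfer_to_wire_transfer_agent'."""
    # Collapse each run of non-alphanumeric characters into one underscore, then lower-case.
    slug = re.sub(r"[^0-9A-Za-z]+", "_", agent_name).strip("_").lower()
    return f"transfer_to_{slug}"


def handoff_tools(sub_agents):
    """Describe each handoff as a zero-argument 'tool' the router LLM may call."""
    return [
        {
            "name": handoff_tool_name(name),
            "description": description,
            "parameters": {"type": "object", "properties": {}},
        }
        for name, description in sub_agents
    ]


if __name__ == "__main__":
    for tool in handoff_tools(SUB_AGENTS):
        print(f'{tool["name"]}: {tool["description"]}')
```

Seen this way, the router's "decision" is just the LLM choosing which of these pseudo-tools to call.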

Then, we simply feed the user's input to `main_agent` and watch the routing happen. At least, that's what the OpenAI documentation claims 😳 ⁉️⚠️. But if you run the code below…

```python
async def main():
    result: RunResult = await Runner.run(main_agent, "What is the status of my wire transfer with id 1234")
    print(result.final_output)


asyncio.run(main())
```

…you will end up with…

```
{"type":"function\",
\"name\":\"transfer_to_wire_transfer_agent\",
\"parameters\":{}}
```

Well, what happened??

OpenAI's documentation is not very clear on this. It took me ~30 minutes to figure out that `handoffs` doesn't automatically hand control off to the right agent. It simply sets the probable next agent in the `result.last_agent` property.

In other words, the SDK simply records the next agent to be called according to the `main_agent`'s LLM output: `main_agent` has determined which agent control should be handed off to, but control has not been handed off yet. To do that, you need to call `Runner.run()` on the next agent. So the modified code would be:

```python
async def main():
    # Define the input before using it in the first run
    user_input = "What is the status of my wire transfer with id 1234"
    result: RunResult = await Runner.run(main_agent, user_input)

    if result and result.last_agent in [accounts_agent, credit_card_agent, wire_transfer_agent]:  # (1)
        print(f"[debug] Handoff to {result.last_agent.name}")
        sub_result: RunResult = await Runner.run(result.last_agent, user_input)  # (2)
        print(f"Final Output => {sub_result.final_output}")  # (3)


asyncio.run(main())
```

1. We simply inspect the value of `last_agent` from the `result`, and if it is one of the available handoff agents,
2. we call `Runner.run()` on that `last_agent` and pass the `user_input` again.
3. Finally, we print the `final_output` of `sub_result`.
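If you want to reason about this two-step pattern without a running Ollama instance, here is a stdlib-only simulation. `FakeAgent`, `FakeResult` and `fake_run` are hypothetical stand-ins for `Agent`, `RunResult` and `Runner.run`, and the keyword matching merely imitates the router LLM's choice. The point is the control flow: the first run only selects a sub-agent via `last_agent`, and a second run actually executes it.

```python
from __future__ import annotations

import asyncio
from dataclasses import dataclass, field


@dataclass(eq=False)  # compare agents by identity, like distinct Agent instances
class FakeAgent:
    """Hypothetical stand-in for agents.Agent (not the SDK class)."""
    name: str
    keywords: tuple = ()  # words that make the fake router pick this agent
    handoffs: list = field(default_factory=list)


@dataclass
class FakeResult:
    """Hypothetical stand-in for RunResult: only final_output and last_agent."""
    final_output: str
    last_agent: FakeAgent


async def fake_run(agent: FakeAgent, user_input: str) -> FakeResult:
    """Imitates the behaviour described above: a router only *selects* a sub-agent."""
    text = user_input.lower()
    for candidate in agent.handoffs:
        if any(word in text for word in candidate.keywords):
            # The router names the next agent but does not run it.
            return FakeResult(final_output="", last_agent=candidate)
    # No handoff matched (or the agent has none): the agent answers itself.
    return FakeResult(final_output=f"{agent.name} answered: {user_input}", last_agent=agent)


async def route(main_agent: FakeAgent, user_input: str) -> tuple[str, str]:
    """Run the router, then run last_agent a second time if it is one of the sub-agents."""
    result = await fake_run(main_agent, user_input)
    if result.last_agent in main_agent.handoffs:
        sub_result = await fake_run(result.last_agent, user_input)
        return result.last_agent.name, sub_result.final_output
    return result.last_agent.name, result.final_output


if __name__ == "__main__":
    wire = FakeAgent("Wire Transfer Agent", keywords=("wire",))
    cards = FakeAgent("Credit Card Agent", keywords=("credit card",))
    operator = FakeAgent("Operator Agent", handoffs=[cards, wire])
    print(asyncio.run(route(operator, "What is the status of my wire transfer with id 1234")))
```

An unrelated question falls through the `if` branch, and the router's own answer is returned, which mirrors the `elif` branch in the full example that follows.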

Here is the final, complete example with everything we've learned so far:

```python
async def main():
    print("Enter your question (or 'exit' to quit): ")
    while True:
        user_input = input("User => ")
        if user_input.lower() == "exit":
            break

        result: RunResult = await Runner.run(main_agent, user_input)

        if result and result.last_agent in [accounts_agent, credit_card_agent, wire_transfer_agent]:
            # The router selected a sub-agent: run it on the same input
            print(f"[debug] Handoff to {result.last_agent.name}")
            sub_result: RunResult = await Runner.run(result.last_agent, user_input)
            print(f"Assistant [{result.last_agent.name}] => {sub_result.final_output}")
        elif result.final_output:
            # No handoff: the router answered by itself
            print(f"Assistant [{result.last_agent.name}] => {result.final_output}")


asyncio.run(main())
```

With the code above, we see the following output:

```
Enter your question (or 'exit' to quit):
User => What is the status of my wire transfer with id 1234
[debug] Handoff to Wire Transfer Agent
[debug] Getting wire transfer status
Assistant [Wire Transfer Agent] => The current status of your wire transfer with ID 1234 is "Completed". This means that the transfer has been successfully processed and the funds have been transferred to the intended recipient. If you have any further questions or concerns, please don't hesitate to ask.
User =>
```

Tool response as final response

Now, consider a case where you don't need the LLM to use the tool response (`get_wire_transfer_status` in the case of `wire_transfer_agent`) to prepare the final answer, and instead want to return the tool response itself as the final answer. Well, the Agents SDK makes this very simple!

```python
wire_transfer_agent = Agent(
    name="Wire Transfer Agent",
    handoff_description="Specialist agent for customer wire transfers",
    instructions="You provide assistance with customer wire transfers. Answer questions about customer wire transfers.",
    model=OllamaProviderAsync().get_model(),
    tools=[get_wire_transfer_status],
    tool_use_behavior="stop_on_first_tool",  # (1)
)
```

1. Simply set `tool_use_behavior="stop_on_first_tool"` on the respective agent.
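The difference between the default behaviour (`"run_llm_again"`, where the tool's output is sent back to the model so it can compose the final answer) and `"stop_on_first_tool"` can be sketched without the SDK. `run_with_tool` and `fake_llm_summarize` are hypothetical stand-ins; the latter takes the place of the extra LLM round trip.

```python
from typing import Callable, Literal

# The two string values discussed here; the SDK also accepts other forms (see its docs).
ToolUseBehavior = Literal["run_llm_again", "stop_on_first_tool"]


def get_wire_transfer_status(order_id: str) -> str:
    # Same dummy tool as in the post, minus the @function_tool decorator.
    return f"Wire transfer status for {order_id}: Completed"


def fake_llm_summarize(question: str, tool_output: str) -> str:
    # Hypothetical stand-in for the extra LLM turn that rewrites the tool output as prose.
    return f"You asked: {question!r}. {tool_output}."


def run_with_tool(
    question: str,
    tool: Callable[[str], str],
    tool_arg: str,
    tool_use_behavior: ToolUseBehavior = "run_llm_again",
) -> str:
    tool_output = tool(tool_arg)
    if tool_use_behavior == "stop_on_first_tool":
        # The first tool result becomes the final output; no second model call.
        return tool_output
    # Default: feed the tool result back to the "model" and return its answer.
    return fake_llm_summarize(question, tool_output)


if __name__ == "__main__":
    question = "What is the status of my wire transfer with id 1234"
    print(run_with_tool(question, get_wire_transfer_status, "1234"))
    print(run_with_tool(question, get_wire_transfer_status, "1234", "stop_on_first_tool"))
```

Skipping the second model call also saves one local inference per request, which is noticeable on a laptop running Ollama.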

With `stop_on_first_tool` set, the output changes as follows:

```
Enter your question (or 'exit' to quit):
User => What is the status of my wire transfer with id 1234
[debug] Handoff to Wire Transfer Agent
[debug] Getting wire transfer status
Assistant [Wire Transfer Agent] => The wire transfer with ID 1234 has been completed.
```

Routing back to main_agent

Now, what if the query doesn't pertain to any sub-agent and you want the main agent to answer it? Well, this works only with thinking models. With the qwen3 model and the following `main_agent`:

```python
main_agent = Agent(
    name="Operator Agent",
    instructions="You determine which agent to use based on the user's question. If the question is about customer accounts, credit cards, or wire transfers, hand off to the appropriate agent. Otherwise, answer the question yourself.",
    model=OllamaProviderAsync().get_model(),
    handoffs=[accounts_agent, credit_card_agent, wire_transfer_agent],
)
```

For an input like "Who is the president of USA?", I'm seeing:

```
Enter your question (or 'exit' to quit):
User => who is the president of USA?
Assistant [Operator Agent] => <think>
Okay, the user is asking who the president of the USA is. Let me think. First, I need to check if this question falls under any of the categories that require handing off to a specialist agent. The tools provided are for customer accounts, credit cards, and wire transfers. The question is about the US president, which is a general knowledge question, not related to any of the specified financial services. Therefore, I don't need to use any of the transfer functions. I should answer directly. The current president as of 2023 is Joe Biden. So, I'll provide that information.
</think>

The current President of the United States is Joe Biden. He has been in office since January 2021.
```

But if I use regular (non-thinking) LLMs such as llama3.2, I'm seeing:

```
User => Who is the president of USA?
Assistant [Operator Agent] => {"type":"function","function":"lookupExternalAgent","parameters\":{\"name\":\"president_of_USA\"}}
```

As you can see, the agent makes up a tool to look up this fact, even though no such tool is listed in `main_agent`.¹
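Both failure modes surface in `final_output`: the qwen3 answer carries its `<think>` block, and llama3.2 returns a tool call as plain JSON text, much like the very first routing attempt did. A small, stdlib-only post-processing step can at least detect these cases. `strip_think`, `find_leaked_tool_call` and `classify_output` are hypothetical helpers, and the JSON handling is a heuristic built around the two outputs shown in this post, not something the SDK provides.

```python
import json
import re
from typing import Optional, Tuple

# Reasoning traces emitted by thinking models such as qwen3.
THINK_BLOCK = re.compile(r"<think>.*?</think>", re.DOTALL)


def strip_think(text: str) -> str:
    """Drop <think>...</think> blocks and surrounding whitespace."""
    return THINK_BLOCK.sub("", text).strip()


def find_leaked_tool_call(text: str) -> Optional[str]:
    """Return the tool name if text looks like a JSON function call, else None."""
    # The outputs in this post contain stray escaped quotes (\"), so undo those first.
    candidate = text.strip().replace('\\"', '"')
    try:
        payload = json.loads(candidate)
    except json.JSONDecodeError:
        return None
    if not isinstance(payload, dict) or payload.get("type") != "function":
        return None
    # Models disagree on the key: one output above uses "name", the other "function".
    name = payload.get("name") or payload.get("function")
    return name if isinstance(name, str) else None


def classify_output(text: str, known_tools: set) -> Tuple[str, str]:
    """Label final_output as a plain answer, an unexecuted known tool call, or an invented one."""
    cleaned = strip_think(text)
    tool = find_leaked_tool_call(cleaned)
    if tool is None:
        return "answer", cleaned
    if tool in known_tools:
        return "unexecuted_tool_call", tool
    return "unknown_tool_call", tool
```

With the handoff tool names as `known_tools`, an `"unknown_tool_call"` result is a reasonable signal to retry or fall back to a fixed reply instead of showing raw JSON to the user.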

Wrapping up

Great! In this post, I covered how to use the OpenAI Agents SDK's handoffs to orchestrate multi-agent collaboration. By and large, the SDK is very intuitive and works as advertised, apart from the teething issues I covered. OpenAI pioneered not only bringing ChatGPT to the masses but also a de facto standard for inference APIs, one that almost every other model provider has since adopted. Given that, I hope they will do everything in their power to make this SDK more robust.

Coming back to my exploration, I've only gotten my feet wet so far, with deeper dives coming in upcoming posts. I would say "stay tuned", but we're not in an analog world anymore, so I'm gonna say: stay subscribed, and catch you in Part 3 very soon!

I also publish a newsletter where I share my tech adventures at the intersection of Telecom, AI/ML, SW Engineering and Distributed Systems. If you'd like to get my posts delivered directly to your inbox whenever I publish, consider subscribing to my Substack.

I pinky promise 🤙🏻: I won't sell your emails!

Footnotes

1. I have logged this issue in OpenAI's GitHub.