🗞️ TSpec-LLM

https://arxiv.org/abs/2406.01768

What is this paper about?

This paper presents a dataset named TSpec-LLM, which comprises 3GPP specifications from Release 8 to Release 19 (1999–2023). Although the dataset's name contains the word LLM, it is really just a dataset that can be used either to fine-tune an existing LLM or to apply retrieval-augmented generation (RAG) to an LLM of your choice. The paper doesn't propose a new machine learning model or architecture specifically for telecom. It simply proposes a dataset, along with experimental results from using it with several state-of-the-art LLMs from OpenAI, Google (Gemini, Gemini Pro) and Mistral (Mixtral).

The paper starts by surveying prior work on the relevance and use of LLM technology in telecom. One important prior work that it cites and compares against is TeleQnA [1]. The authors argue that in [1] the dataset was created by converting (normalizing) the original text into a standardized format, thereby losing structural information such as equations, tables and normative references. As an improvement, this paper preserves the structure of the 3GPP specifications as they appear in the original DOC format, converts them to the corresponding Markdown (MD) files, and feeds those to state-of-the-art LLMs for inference.

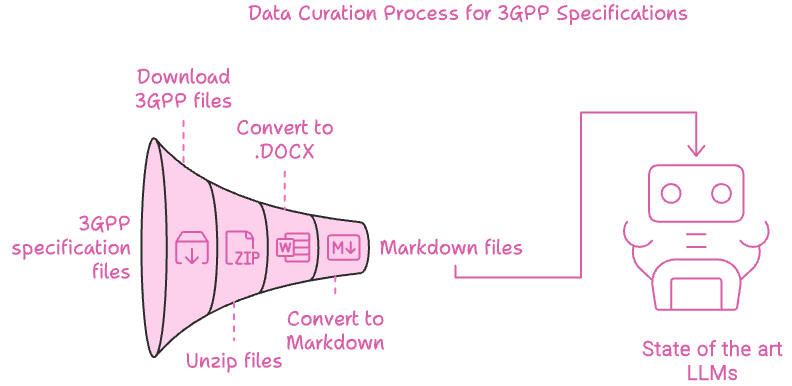

The authors also clearly explain the process they followed to curate this dataset. They start with a Python utility named download_3gpp [2], which automates downloading the zipped 3GPP specification files by release. They then unzip those files and place the contents in per-release folders. The next two steps are the ones that really interested me.
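The unzip step can be sketched with Python's standard zipfile module. This assumes (hypothetically) that the archives for one release have already been downloaded into a single folder and that each archive gets its own sub-folder named after the archive; download_3gpp's own output layout may differ, and the function name is illustrative.

```python
import zipfile
from pathlib import Path


def unzip_release(zip_dir: Path, out_root: Path, release: str) -> list[Path]:
    """Extract every .zip in zip_dir into out_root/<release>/<archive stem>/.

    Hypothetical layout: one folder of zipped specs per release, e.g. as left
    behind by a download tool. Returns the paths of all extracted members.
    """
    extracted: list[Path] = []
    for archive in sorted(zip_dir.glob("*.zip")):
        target = out_root / release / archive.stem  # one sub-folder per archive
        target.mkdir(parents=True, exist_ok=True)
        with zipfile.ZipFile(archive) as zf:
            zf.extractall(target)
            extracted.extend(target / name for name in zf.namelist())
    return extracted
```

Keeping one sub-folder per archive avoids name clashes when several versions of the same specification are unpacked into the same release.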

They then convert all the .DOC files to .DOCX format using LibreOffice in headless mode. Once all the available specifications are in .DOCX format, they are fed to the pandoc utility to convert the .DOCX files to .md files. This greatly simplifies preserving the original structure of the 3GPP specifications in a form that state-of-the-art LLMs can readily consume (refer Figure 1).
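A minimal sketch of this two-stage conversion, driving the soffice (LibreOffice) and pandoc command-line tools from Python. It assumes both tools are on PATH and that outputs are written next to their inputs; the helper names are hypothetical and this is not the authors' notebook code.

```python
import subprocess
from pathlib import Path


def doc_to_docx_cmd(doc: Path) -> list[str]:
    # LibreOffice headless conversion; writes <stem>.docx into --outdir.
    return ["soffice", "--headless", "--convert-to", "docx",
            "--outdir", str(doc.parent), str(doc)]


def docx_to_md_cmd(docx: Path) -> list[str]:
    # pandoc reads .docx (it has no reader for legacy binary .doc) and writes Markdown.
    return ["pandoc", "-f", "docx", "-t", "markdown",
            "-o", str(docx.with_suffix(".md")), str(docx)]


def plan_conversions(root: Path) -> list[list[str]]:
    """All .doc -> .docx commands first, then one .docx -> .md command per file."""
    cmds: list[list[str]] = []
    docx_files = set(root.rglob("*.docx"))  # specs that already ship as .docx
    for doc in sorted(root.rglob("*.doc")):
        cmds.append(doc_to_docx_cmd(doc))
        docx_files.add(doc.with_suffix(".docx"))  # file the first stage will create
    for docx in sorted(docx_files):
        cmds.append(docx_to_md_cmd(docx))
    return cmds


def run_conversions(root: Path) -> None:
    for cmd in plan_conversions(root):
        subprocess.run(cmd, check=True)  # stop on the first failing conversion
```

All .doc conversions are scheduled before any pandoc call because the Markdown step reads the .docx files that the first stage produces.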

Once the dataset is prepared, the authors test its effectiveness by creating a set of questions of varying difficulty (easy, medium, complex) and quizzing state-of-the-art LLMs with them. While this step could be done manually, they used LLMs here as well. They tasked one LLM with generating multiple-choice questions at these difficulty levels and then tasked a second LLM with verifying the questions produced by the first. Questions where the two LLMs conflicted (e.g. the first LLM labels a question as complex but the second disagrees) went through human evaluation to finalize them. Finally, they quizzed the LLMs with the finalized questions and recorded their accuracy.
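The generate, verify, escalate loop can be reduced to a simple triage step. This is a minimal sketch, not the authors' code: it assumes the generator's and verifier's outputs have already been collected into records, and the field names (plus the choice to also escalate answer disagreements) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class GeneratedQuestion:
    text: str
    options: list[str]
    answer: str           # correct option letter proposed by the generator LLM
    generator_level: str  # "easy" | "medium" | "complex", proposed by the generator
    verifier_level: str   # difficulty the verifier LLM assigns to the same question
    verifier_answer: str  # option the verifier LLM thinks is correct


def triage(questions: list[GeneratedQuestion]):
    """Split questions into auto-accepted ones and conflicts for human review."""
    accepted, needs_review = [], []
    for q in questions:
        agrees = (q.generator_level == q.verifier_level
                  and q.answer == q.verifier_answer)
        (accepted if agrees else needs_review).append(q)
    return accepted, needs_review
```

Only the `needs_review` list goes to humans, which keeps the manual effort proportional to the number of disagreements rather than the size of the question set.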

For the evaluation, they first posed the questions directly to the base LLMs and recorded their accuracy. They then augmented these LLMs with RAG using llama_index [3] and reran the quizzes.
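llama_index takes care of chunking, embedding and retrieval. To show the retrieve-then-prompt idea without an API key, here is a dependency-free sketch that swaps in plain bag-of-words cosine similarity for embedding search; the prompt wording and function names are illustrative assumptions, not the paper's setup.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the question (bag-of-words cosine)."""
    q = Counter(tokenize(question))
    ranked = sorted(chunks, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True)
    return ranked[:k]


def build_rag_prompt(question: str, chunks: list[str], k: int = 2) -> str:
    # Retrieved spec text is prepended so the LLM can answer from it.
    context = "\n\n".join(retrieve(question, chunks, k))
    return f"Answer using the context below.\n\nContext:\n{context}\n\nQuestion: {question}\nAnswer:"
```

In a real pipeline the chunks would be sections of the Markdown specs and the similarity would come from an embedding model; the structure of the prompt stays the same.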

Finally, they present numbers on the sheer volume of files they have open-sourced as part of this dataset compared to [1], along with accuracy numbers for the non-RAG and RAG approaches and how each model performed. Naturally, response quality increases with RAG, since the model can draw on supporting text retrieved from the dataset. They report that RAG improved model accuracy to as much as 75%, which is fair and understandable. They also acknowledge the dataset's shortcomings and outline future work to address them, including using local models for better privacy.
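Comparing the two passes boils down to scoring each model's answers against the same answer key, overall and per difficulty level. A minimal sketch, assuming predictions and the key are stored as question-id to option-letter mappings (a hypothetical format, not the paper's):

```python
from collections import defaultdict


def accuracy(predictions: dict[str, str], answer_key: dict[str, str]) -> float:
    """Fraction of keyed questions whose predicted option matches the key."""
    if not answer_key:
        return 0.0
    correct = sum(1 for qid, ans in answer_key.items() if predictions.get(qid) == ans)
    return correct / len(answer_key)


def accuracy_by_level(predictions: dict[str, str], answer_key: dict[str, str],
                      levels: dict[str, str]) -> dict[str, float]:
    """Accuracy per difficulty level (e.g. easy / medium / complex)."""
    totals: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for qid, ans in answer_key.items():
        lvl = levels[qid]
        totals[lvl] += 1
        hits[lvl] += predictions.get(qid) == ans  # a missing answer counts as wrong
    return {lvl: hits[lvl] / totals[lvl] for lvl in totals}
```

For instance, with a five-question key, a model that answers two questions correctly scores 0.4; running the same function on the base and RAG answers gives a like-for-like comparison.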

What are the key contributions of this paper?

Dataset

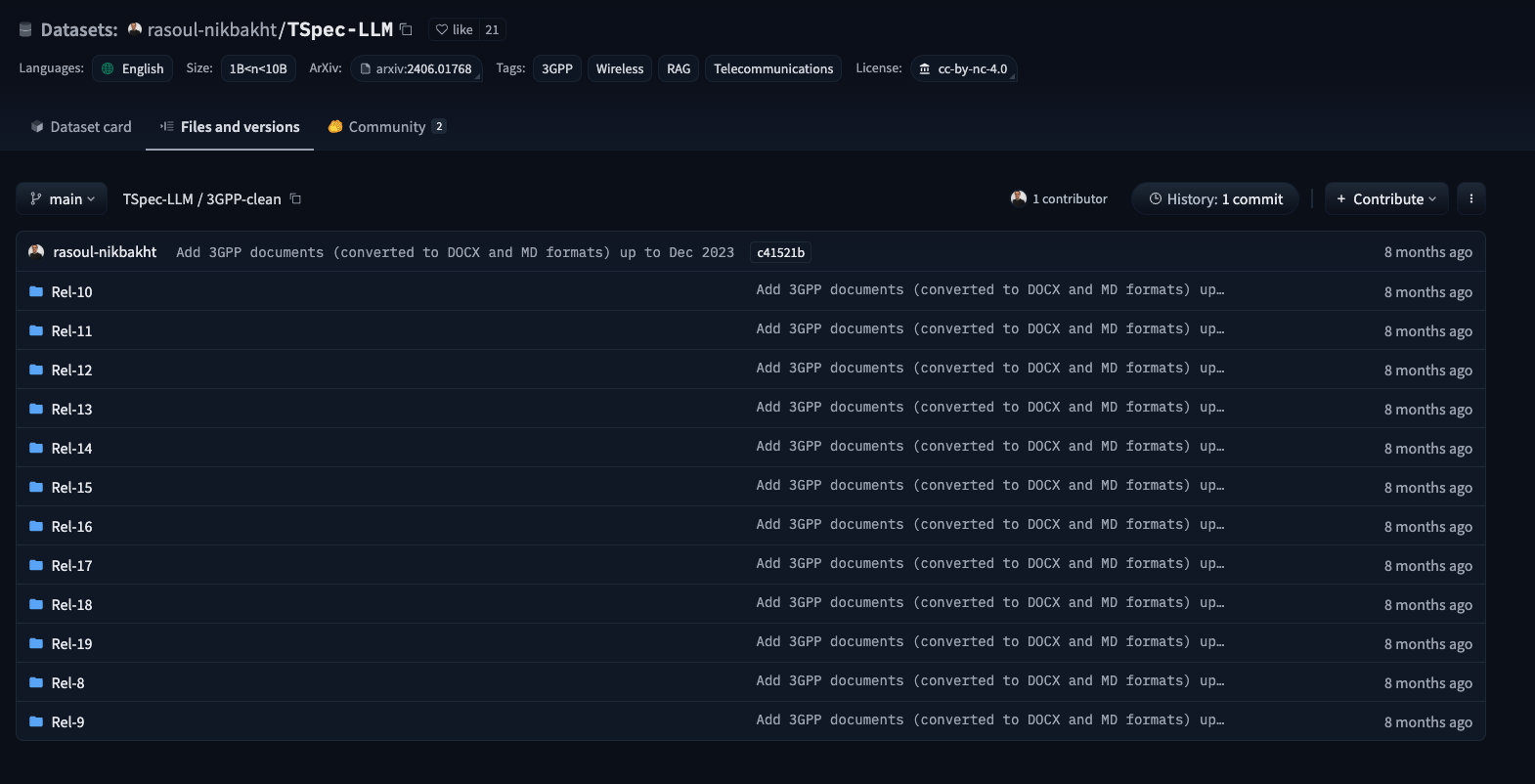

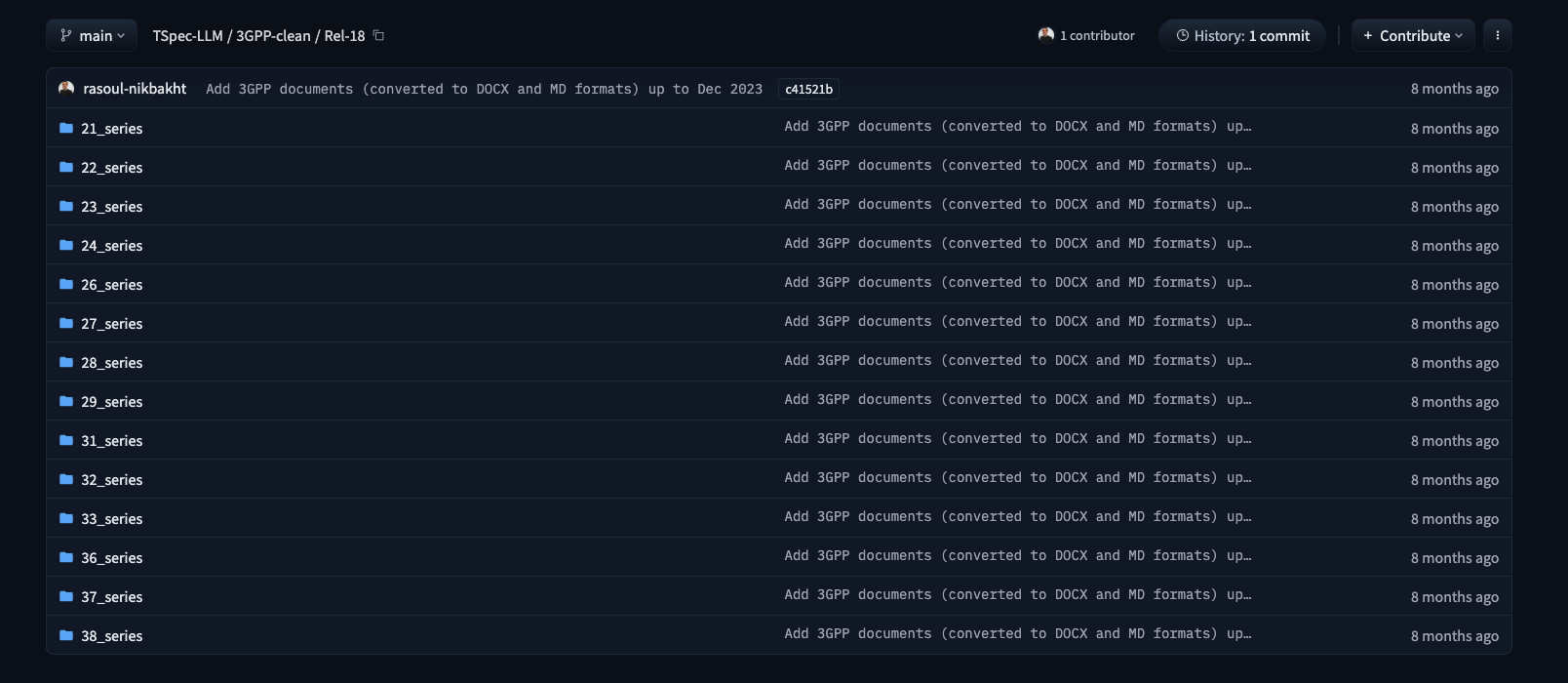

As the title of the paper suggests, one of its key contributions is the dataset itself. Spanning over 15 GB, the dataset is hosted in a Hugging Face repository and contains all the 3GPP specification documents from Release 8 to Release 19 (refer Figure 2). Each Release folder contains one folder per specification (refer Figure 3), and each specification folder contains its documents in both .DOCX and .MD format (refer Figure 4).
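The release/specification/file layout makes it easy to pick out just the Markdown files, for example to build a RAG index over a single release. A minimal sketch, assuming a local copy laid out as root/<release>/<spec>/<files>; the function name and the folder names used in testing are hypothetical.

```python
from pathlib import Path


def index_dataset(root: Path) -> dict[str, dict[str, list[Path]]]:
    """Map release -> spec -> sorted list of Markdown files in that spec folder.

    Specs without any .md file are skipped; releases are kept even if empty.
    """
    index: dict[str, dict[str, list[Path]]] = {}
    for release_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        specs: dict[str, list[Path]] = {}
        for spec_dir in sorted(p for p in release_dir.iterdir() if p.is_dir()):
            md_files = sorted(spec_dir.glob("*.md"))  # ignore the .docx twins
            if md_files:
                specs[spec_dir.name] = md_files
        index[release_dir.name] = specs
    return index
```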

Pre / Post Processing Python scripts

The process-3GPP.ipynb notebook explains how to use [2] to download the 3GPP specifications and how to use LibreOffice and Pandoc to process the files.

The question_generation_prompt.py script explains how they constructed the questions using basic prompt engineering techniques.
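question_generation_prompt.py is where the authors' actual prompts live. As a rough illustration only, a generation prompt might be templated per difficulty level, with the JSON reply validated before it is handed to the verifier LLM. The template wording, JSON keys and function names below are hypothetical, not taken from the repository.

```python
import json

DIFFICULTIES = ("easy", "medium", "complex")

# Hypothetical template; the authors' real prompt may differ substantially.
PROMPT_TEMPLATE = """You are a 3GPP expert. Using ONLY the specification excerpt below,
write one {difficulty} multiple-choice question with four options (A-D).
Return JSON with keys "question", "options", "answer".

Excerpt:
{excerpt}
"""


def build_generation_prompt(excerpt: str, difficulty: str) -> str:
    if difficulty not in DIFFICULTIES:
        raise ValueError(f"unknown difficulty: {difficulty}")
    return PROMPT_TEMPLATE.format(difficulty=difficulty, excerpt=excerpt.strip())


def parse_generated(raw: str) -> dict:
    """Parse the generator's JSON reply and reject malformed questions."""
    item = json.loads(raw)
    if set(item) != {"question", "options", "answer"}:
        raise ValueError("unexpected keys")
    if len(item["options"]) != 4 or item["answer"] not in ("A", "B", "C", "D"):
        raise ValueError("malformed question")
    return item
```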

Sample generated questions

Sampled_3GPP_TR_Questions.json shows a sample of the generated questions.

What are my key takeaways?

I learnt that there is a tool [2] built specifically to download 3GPP specifications effortlessly, and I can certainly make use of it in my workflows. This dataset also does a pretty good job of converting the information in the documents, such as tables, equations and code chunks, into Markdown, which today's LLMs can consume without much noise. I also learnt that pandoc can reliably convert documents to Markdown. I wasn't sure about the dependency on the .DOCX format; one likely reason is that pandoc reads .docx but not the legacy binary .doc format, so the intermediate conversion is required.

While I appreciate the contribution made by this paper, I do notice that certain parts of the 3GPP documents, such as UML sequence diagrams, class diagrams and relationship diagrams, are embedded as shapes or images within the documents, and that information is lost during conversion to Markdown (refer Figure 5).

Perhaps using a multimodal LLM like GPT-4o or LLaVA could help mitigate this?
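One possible first step, sketched under assumptions: pandoc's --extract-media option writes the images embedded in a .docx to a folder and links them from the Markdown, and those links can then be collected and passed to a multimodal model. This only recovers embedded image files; diagrams drawn as native Word shapes may still be dropped, and formats such as EMF may need converting to PNG before a model can read them. The function names are hypothetical.

```python
import re
from pathlib import Path

# Matches Markdown image links such as ![caption](media/image1.png){width="3in"}
IMAGE_LINK = re.compile(r"!\[[^\]]*\]\(([^)\s]+)")


def docx_to_md_with_media_cmd(docx: Path, media_dir: Path) -> list[str]:
    # --extract-media copies embedded images out of the .docx and links them in the .md
    return ["pandoc", "-f", "docx", "-t", "markdown",
            f"--extract-media={media_dir}",
            "-o", str(docx.with_suffix(".md")), str(docx)]


def image_paths(markdown: str) -> list[str]:
    """Return the image paths referenced in converted Markdown, in document order."""
    return IMAGE_LINK.findall(markdown)
```

Each collected path could then be sent to the multimodal model to produce a text description that replaces the bare image link in the Markdown.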

I also publish a newsletter where I share my tech adventures at the intersection of Telecom, AI/ML, SW Engineering and Distributed Systems. If you'd like my posts delivered directly to your inbox whenever I publish, consider subscribing to my Substack.

I pinky promise 🤙🏻. I won’t sell your emails!