Equipping PyOMlx with OpenAI Compatibility

It’s the year-end break and I’ve got some quiet time. While people normally travel across the globe to meet their loved ones, I was holed up at home, witnessing my first white Christmas.

With lots of free time on hand, I decided to check off one of the long-pending items on my wishlist.

When I started PyOMlx, I maintained a roadmap.md file. One of the items listed in that file was compliance with the OpenAI chat completions endpoint.

While we can debate all day about the openness of OpenAI, the indisputable fact is that OpenAI standardized remote API integration and made their clients open source. This in turn helped many other model providers quickly embrace the chat completions endpoint as a de facto standard.

But Why?

So the natural question is: why? Well, the answer is simplicity. From the consumer’s point of view, if you simply want to compare and contrast the outputs of multiple models, having a common interface makes sense. From the provider’s perspective, flexibility and drop-in replaceability are an additional unique selling point. In fact, many early LLM providers (back in those days!) advertised heavily on this aspect. Then, slowly, all the major open-source projects started embracing OpenAI’s endpoints¹.

Hence I naturally wanted to get on that bandwagon too, especially since, at the time, no popular server implementation was available for MLX models. Even now, I don’t see any direct contenders for PyOMlx.

Howdy neighbors 👋🏻

One of the reasons I started PyOMlx was to mimic Ollama’s easy model-serving experience, but for MLX models. MLX was nascent and developing fast. I had always been an Apple fanboy, so naturally I had Apple hardware, and I was GPU-poor anyway. Embracing MLX was therefore very natural for me. When I spotted this opportunity, I capitalized on it.

Fast-forward 11 months (I debuted PyOMlx in Feb ’24), and a lot of development has happened in this space:

- LM Studio started natively supporting MLX models

- MLX LM (the native project within Apple MLX) also provided the `mlx_lm.server` module

- MLX Server: a new project surfaced that provided a standalone implementation of the HTTP server endpoints

Call me biased, but none of the above replaces PyOMlx in my opinion.

Reasons

- LM Studio is more of a desktop app (perhaps competing with MindMac and the like, including PyOllaMx) than an HTTP frontend for serving MLX models.

- MLX Server doesn’t comply with the OpenAI chat completions endpoint, thereby requiring bespoke client implementations.

- MLX LM hosts models in a 1:1 setting. Say you have 10 models and you need a client to be able to invoke any of them: you would be running 10 `mlx_lm.server` commands, with each server serving exactly one MLX model.

PyOMlx, on the other hand, mimics Ollama: depending on the model being requested, PyOMlx loads that model into memory and serves the response over HTTP. This way, irrespective of the number of models, there is exactly one HTTP server serving all models on demand.
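To make the on-demand idea concrete, here is a minimal sketch of the pattern: one server process keeps a cache keyed by model name and loads a model only the first time it is requested. This is not PyOMlx’s actual code. `ModelRegistry`, `fake_loader`, `max_loaded` and the least-recently-used eviction are hypothetical choices for illustration; in a real MLX server the loader might wrap `mlx_lm.load`, which returns a model and tokenizer pair.

```python
from collections import OrderedDict
from typing import Any, Callable


class ModelRegistry:
    """Hypothetical on-demand model cache: one server, many models.

    Models are loaded lazily on first request and kept in memory.
    When more than `max_loaded` models are resident, the least recently
    used one is evicted (an assumption for illustration; not necessarily
    what PyOMlx does).
    """

    def __init__(self, loader: Callable[[str], Any], max_loaded: int = 2) -> None:
        self._loader = loader  # e.g. a wrapper around mlx_lm.load
        self._max_loaded = max_loaded
        self._models: "OrderedDict[str, Any]" = OrderedDict()

    def get(self, name: str) -> Any:
        if name in self._models:
            # Cache hit: mark this model as most recently used.
            self._models.move_to_end(name)
            return self._models[name]
        # Cache miss: load the requested model into memory.
        model = self._loader(name)
        self._models[name] = model
        if len(self._models) > self._max_loaded:
            # Evict the least recently used model to bound memory usage.
            self._models.popitem(last=False)
        return model

    def loaded(self) -> list:
        return list(self._models)


# Usage with a stand-in loader that records every real load.
load_calls = []


def fake_loader(name: str) -> str:
    load_calls.append(name)
    return f"<model {name}>"


registry = ModelRegistry(fake_loader, max_loaded=2)
registry.get("mlx-community/Phi-3-mini-4k-instruct-4bit")
registry.get("mlx-community/Phi-3-mini-4k-instruct-4bit")  # served from cache
registry.get("model-b")  # hypothetical model name
registry.get("model-c")  # hypothetical; evicts the Phi-3 model (least recently used)
print(load_calls)
print(registry.loaded())
```

The second request for the Phi-3 model never touches the loader, which is the whole point: the HTTP layer stays the same while the registry decides what is resident in memory.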

v0.1.0 Updates

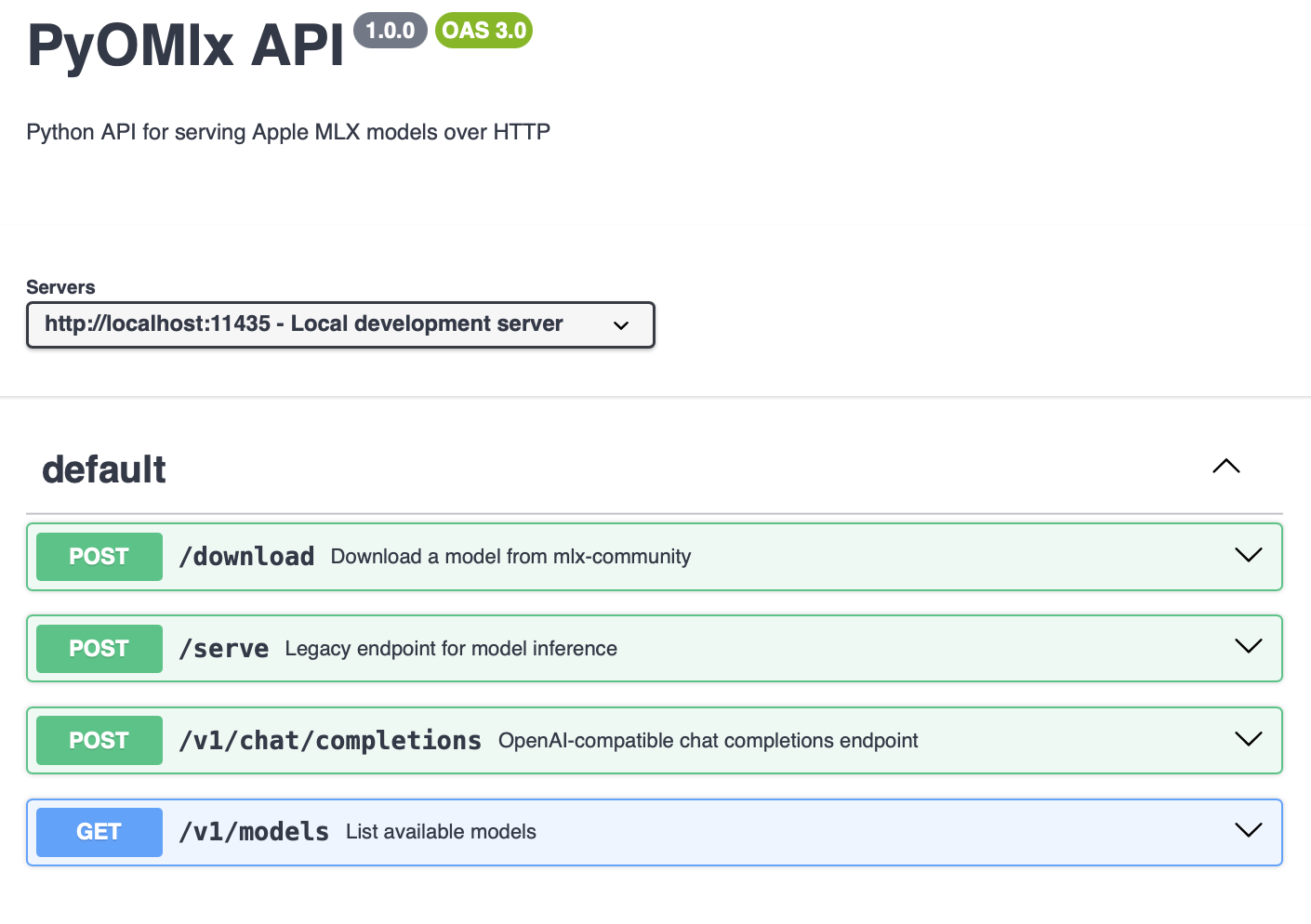

- Added OpenAI API compatible chat completions and list models endpoints: `/v1/chat/completions` and `/v1/models`.

- Added a `/download` endpoint to download MLX models directly from the Hugging Face Hub. All models are downloaded from the MLX Community organization on the HF Hub.

- Added a `/swagger.json` endpoint that serves the OpenAPI spec of all endpoints available in PyOMlx.
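Because both `/swagger.json` and `/v1/models` return JSON, a client can discover what a running PyOMlx instance offers using only the Python standard library. The sketch below assumes the spec follows the usual Swagger/OpenAPI layout with a top-level `paths` object, and that `/v1/models` returns the OpenAI list shape (`{"object": "list", "data": [{"id": ...}, ...]}`). The helper names are hypothetical, and the sample dictionaries stand in for a live server.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:11435"  # PyOMlx's port, as used in this post


def fetch_json(url: str) -> dict:
    """GET a URL and decode its JSON body (requires a running server)."""
    with urllib.request.urlopen(url) as resp:
        return json.load(resp)


def endpoint_paths(spec: dict) -> list:
    """List the paths declared in a Swagger/OpenAPI spec, sorted."""
    return sorted(spec.get("paths", {}))


def model_ids(models_response: dict) -> list:
    """Extract model ids from an OpenAI-style list-models response."""
    return [m["id"] for m in models_response.get("data", [])]


if __name__ == "__main__":
    # Against a live server you would do:
    #   spec = fetch_json(f"{BASE_URL}/swagger.json")
    #   models = fetch_json(f"{BASE_URL}/v1/models")
    # Offline stand-ins with the assumed response shapes:
    spec = {"paths": {"/v1/models": {}, "/v1/chat/completions": {}, "/swagger.json": {}}}
    models = {
        "object": "list",
        "data": [{"id": "mlx-community/Phi-3-mini-4k-instruct-4bit", "object": "model"}],
    }
    print(endpoint_paths(spec))
    print(model_ids(models))
```

With the `openai` package, `client.models.list()` should hit the same `/v1/models` endpoint.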

So what?

Well, if you’re still asking “so what?”, I’m not gonna explain anything in writing. Let me show you the damn code 😁

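```python
# pip install openai
from openai import OpenAI

# Point the official OpenAI client at the local PyOMlx server.
# The client requires an api_key; 'pyomlx' is just a placeholder value.
client = OpenAI(base_url='http://127.0.0.1:11435/v1', api_key='pyomlx')

# The model name selects which MLX model PyOMlx loads and serves.
response = client.chat.completions.create(
    model="mlx-community/Phi-3-mini-4k-instruct-4bit",
    messages=[{'role': 'user', 'content': 'how are you?'}],
)

print(response.choices[0].message.content)
```

The port I have selected to bind PyOMlx is 11435. Do you know why? 😉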
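The `openai` package is only a convenience: the same request is a plain JSON POST to `/v1/chat/completions`, which is exactly what makes OpenAI compatibility so handy. The sketch below uses only the standard library to build roughly the request the client sends, and to ask several MLX models the same question through the one PyOMlx server for a quick side-by-side comparison. It assumes PyOMlx returns the standard OpenAI response shape (`choices[0].message.content`); the helper names are hypothetical.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:11435/v1"  # PyOMlx, as in the example above


def build_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build roughly the POST that client.chat.completions.create() sends."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # The OpenAI client sends its api_key as a bearer token; mirror that.
            "Authorization": "Bearer pyomlx",
        },
        method="POST",
    )


def extract_reply(response_body: dict) -> str:
    """Pull the assistant text out of an OpenAI-style chat completion."""
    return response_body["choices"][0]["message"]["content"]


def ask(model: str, prompt: str) -> str:
    """Send one chat completion to the server (requires PyOMlx running)."""
    with urllib.request.urlopen(build_chat_request(model, prompt)) as resp:
        return extract_reply(json.load(resp))


def compare(models: list, prompt: str) -> dict:
    """Ask every model the same question; one server loads each on demand."""
    return {m: ask(m, prompt) for m in models}
```

With PyOMlx running, `compare([...], "how are you?")` would return a dict mapping each requested model name to its reply, with only the `model` field changing between requests.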

I also publish a newsletter where I share my tech adventures at the intersection of telecom, AI/ML, software engineering and distributed systems. If you’d like my posts delivered directly to your inbox whenever I publish, consider subscribing to my Substack.

I pinky promise 🤙🏻: I won’t sell your emails!

Footnotes

¹ For example, Ollama and Hugging Face started offering this support.