Introducing my pet projects: PyOllaMx and PyOMlx

Oh my Bing AI!

When ChatGPT was introduced, my dependence on Google Search started to decrease a little. I used ChatGPT to get direct answers to some how-to and explain-this questions, but my overall reliance on Google Search wasn’t much affected. That’s because ChatGPT (at that time) was limited to knowledge from before late 2021.

However, the introduction of Bing AI changed the game for me. From that point on, I was more or less an Edge person 💁🏻‍♂️. I even reached the point where I ported all my bookmarks, shortcuts and even my password manager to the Edge browser. Never in my life did I think I would use a browser from the Internet Explorer family 🤭. I was beginning to drink the Microsoft Kool-Aid 😵‍💫 and then… this happened… 🤦🏻

That’s when I realized how dependent on and addicted to these chat-based search assistants I had become. Trust me, it felt extremely weird and cumbersome to go back to Google Search (yeah, no other way) even to understand why I was getting the above error. For a few days I was quite restless and missed chatting with Bing to get direct answers instead of chasing the answer through a list of blue links.

There is an AI for that…

Having convinced myself that I needed a better alternative (or at least a backup plan) to both Google and Bing, I explored some other options:

- Perplexity - Honestly, IMO not worth the hype. Sure, it does provide direct answers, but its generic web search isn’t up to the mark and it’s definitely not worth $$ for the Pro version. Plus, why do I need to log in to search? 🤷🏻‍♂️

- Bard - Functionally it works, but it is tied to my Google account, and so to the very reliance on Google that I wanted to decrease. Plus, the sheer amount of tracking 🙅🏻‍♂️ is nuts.

- Hugging Chat - Honestly, very close to what I wanted. Guest mode, open source, new shiny models… but 8 out of 10 times it was either slow or not working. I was at the mercy of GPUs being relinquished by HF Pro users, and most of the time (at least for me) it was not very usable, hence not useful.

There is an old saying that if all the doors are closed, an inner door will open. For me, the inner door opened within my MacBook: I stumbled upon Ollama.

Open Ollama sesame…

The Ollama project made it trivial to run most popular open-source LLMs on a MacBook. It also serves the loaded model over the network, offers both Python and JS SDKs, and even has an LLM client implementation in Langchain.
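To make the "serves the model over the network" part concrete, here is a minimal sketch that talks to Ollama’s local REST API using only the Python standard library. It assumes Ollama is listening on its default address (localhost:11434) and that the model you name has already been pulled; the helper names build_generate_request and ask_ollama are mine, not part of any SDK.

```python
import json
import urllib.request

# Ollama's default local address; change it if your server listens elsewhere.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str, temperature: float = 0.7) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # ask for one JSON object instead of a token stream
        "options": {"temperature": temperature},
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_ollama(model: str, prompt: str) -> str:
    """Send the prompt to a locally running Ollama server and return its reply text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.loads(response.read())["response"]
```

With the Ollama app running, something like ask_ollama("llama2", "Why is the sky blue?") should return the model’s answer as a plain string. The official Python SDK wraps the same endpoint, so this is only meant to show what goes over the wire.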

Ollama is pretty active in updating its models, and its Modelfile ecosystem made it super easy to port any available GGUF / Llama 2 model on Hugging Face to Ollama and serve it from the Ollama macOS app. The cherry on the cake was that the Ollama CLI commands and the Modelfile mimic Docker to a large extent (ollama pull, push, run, rm etc.), which made the user experience very addictive and attractive.

I played with multiple models on the command line to get a feel for what it could offer, and the result was mind-blowing. Sure, it cannot search the web (as Bing AI would), but the whole UX of running a decently powered LLM offline, on-device and super private was a game changer for me. Adding the power of Python to hook the DuckDuckGo search engine to the LLM and perform RAG (retrieval-augmented generation) sealed the deal. I was sold on the prospect of hunting for Ollama clients instead of chat-based assistants…
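The search-plus-LLM idea above boils down to three steps: retrieve web results, fold them into the prompt, and let the model answer from them. Below is a rough sketch of that flow. The search function and the LLM are passed in as plain callables, so nothing here is tied to a particular DuckDuckGo client; the result shape (dicts with title, body and href keys) and the prompt wording are my assumptions for illustration.

```python
from typing import Callable, Dict, List

# Hypothetical signature: takes a query, returns a list of result dicts.
SearchFn = Callable[[str], List[Dict[str, str]]]


def build_rag_prompt(question: str, results: List[Dict[str, str]], max_results: int = 3) -> str:
    """Fold the top search snippets into a prompt so the LLM answers from them."""
    context_lines = []
    for number, result in enumerate(results[:max_results], start=1):
        context_lines.append(
            f"[{number}] {result['title']}: {result['body']} ({result['href']})"
        )
    context = "\n".join(context_lines) if context_lines else "(no search results)"
    return (
        "Answer the question using only the web results below. "
        "Cite sources by their number.\n\n"
        f"Web results:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


def answer_with_search(question: str, search: SearchFn, llm: Callable[[str], str]) -> str:
    """Retrieve, augment, generate: the three RAG steps in order."""
    results = search(question)                    # 1. retrieve
    prompt = build_rag_prompt(question, results)  # 2. augment
    return llm(prompt)                            # 3. generate
```

Keeping the search and the model as injected callables means the same function works whether the LLM behind it is Ollama or something else, and it can be tried out with fake search results before wiring in a real search client.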

Just when you thought it’s over, it isn’t

My hunt for an Ollama UI was pretty interesting. I explored:

- Ollamac - A SwiftUI-based native macOS app for Ollama. It is pretty basic and decent, but it lacks bells and whistles like chat history, image/music support etc. Plus, I’d have to learn SwiftUI in order to extend it. It is on my active to-do list.

- LM Studio - A pretty neat and fully loaded app. But the license is not straightforward: they say it is free for personal use, but their terms aren’t encouraging. Also, it appears to support Llama and GPT based models, but has no support for MLX.

- MindMac - Fully featured, with support for both paid and open-source LLMs, but the app itself is a paid app.

Needless to say, I also explored Streamlit & Gradio, and I had so much fun developing many ML applications with them.

While these frameworks were great and make it super easy to begin your journey into ML applications, at least to me they didn’t deliver the feel of a native macOS app. That’s when I decided to take the plunge into custom app development for my own needs.

One more thing…

Before I delve deep into my pet projects, I also want to talk about the MLX project. MLX is Apple’s new array framework for Apple silicon devices. Ever since I started learning PyTorch and other ML frameworks, I always whined about not being able to use .to('cuda') in my notebooks locally. Although Apple’s Metal framework abstracts many of these nuances, I was looking for a more torch-like library that works natively on Apple silicon. In December 2023, Apple unveiled this new project and it just blew me away.

The examples shared in the mlx-examples repo were especially mind-blowing. One can try image generation and speech-to-text transcription locally, on-device and 100% offline.

Having tasted blood with this local, on-device user experience, I was determined that my pet project should be capable of dealing with both Ollama and MLX models, and that paved the way for the PyOllaMx and PyOMlx projects.

Enough talk, show code!

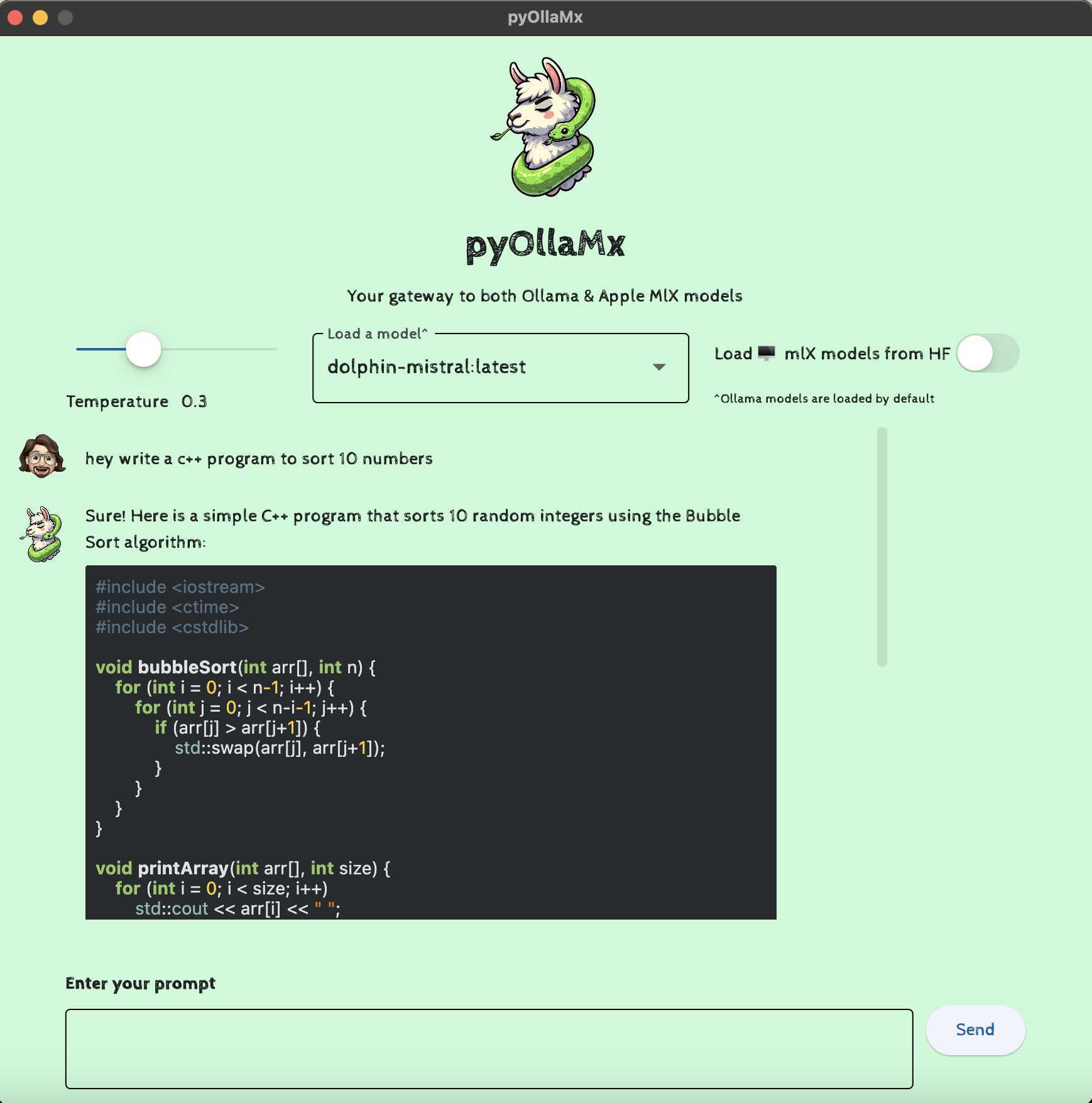

PyOllaMx - Your gateway to both Ollama & Apple MLX models

PyOllaMx is a chatbot macOS application capable of chatting with both Ollama and Apple MLX models. For this app to function, it needs both the Ollama and PyOMlx macOS apps running locally (more on this below). These two apps serve their respective models on localhost for PyOllaMx to chat with.

One of the beauties of this app is that I extended Langchain’s custom LLM to support PyOMlx, alongside Ollama’s official LLM class in Langchain. So from the prompt usage point of view I don’t have to change anything, yet I can dynamically switch LLMs based on user input. This gives the user the flexibility of choosing between Llama 2 and MLX models on demand.
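To illustrate the switching idea without pulling in Langchain, here is a dependency-free sketch of the same pattern: one prompt-facing interface with interchangeable backends picked at call time. This is not the app’s actual code, and the class and backend names are hypothetical; in the real app the two backends would be Langchain’s Ollama class and a custom LLM that talks to PyOMlx.

```python
from typing import Callable, Dict, List

# A backend is anything that turns a prompt into a completion.
Backend = Callable[[str], str]


class SwitchableLLM:
    """One prompt-facing interface, several interchangeable backends."""

    def __init__(self) -> None:
        self._backends: Dict[str, Backend] = {}

    def register(self, name: str, backend: Backend) -> None:
        """Make a backend selectable under the given name."""
        self._backends[name] = backend

    def available(self) -> List[str]:
        """Names the user can pick from, e.g. to fill a dropdown."""
        return sorted(self._backends)

    def invoke(self, prompt: str, backend: str) -> str:
        """Run the same prompt on whichever backend the user selected."""
        if backend not in self._backends:
            raise ValueError(f"Unknown backend {backend!r}; choose from {self.available()}")
        return self._backends[backend](prompt)


# Stand-in backends for illustration; real ones would call Ollama or PyOMlx.
llm = SwitchableLLM()
llm.register("ollama", lambda prompt: f"[ollama] {prompt}")
llm.register("mlx", lambda prompt: f"[mlx] {prompt}")
```

The calling code builds the prompt once and only changes the backend argument, which is the property described above: the prompt side stays untouched while the model underneath is swapped.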

In its first release, PyOllaMx supports the following features:

- Auto-discovery of Ollama & MLX models. Simply download the models as you normally do with the respective tools, and PyOllaMx will discover them for you to pick and choose

- Markdown support on chat messages for programming code

- Selectable Text

- Temperature control
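As a rough idea of how the auto-discovery feature in the list above could work, the sketch below scans the default Hugging Face cache for repos from the mlx-community organisation and extracts model names from the JSON that Ollama’s GET /api/tags endpoint returns. The function names are hypothetical, and whether PyOllaMx discovers models exactly this way is my assumption.

```python
from pathlib import Path
from typing import List

# Default Hugging Face cache location; cached repos live in folders
# named like "models--<org>--<repo>".
HF_CACHE = Path.home() / ".cache" / "huggingface" / "hub"


def discover_mlx_models(cache_dir: Path = HF_CACHE, org: str = "mlx-community") -> List[str]:
    """List cached repos from one organisation as 'org/name' ids."""
    prefix = f"models--{org}--"
    if not cache_dir.is_dir():
        return []
    return sorted(
        f"{org}/{entry.name[len(prefix):]}"
        for entry in cache_dir.iterdir()
        if entry.is_dir() and entry.name.startswith(prefix)
    )


def parse_ollama_tags(tags_response: dict) -> List[str]:
    """Extract model names from the JSON returned by Ollama's GET /api/tags."""
    return sorted(model["name"] for model in tags_response.get("models", []))
```

Both helpers return plain sorted lists of names, so the two sources can be merged into a single model picker in the UI.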

While this app is currently very basic, I intend to build all the bells & whistles found in other commercial apps. This serves the following purposes for me:

- It is super fun to flex my developer and product manager muscles every now & then and handcraft the product with love and care

- It is cost-effective and keeps me relevant to current trends, so I don’t become obsolete

- My app(s) could also benefit others who, like me, are on the lookout for tools that value privacy and control.

If by any chance you find this app interesting, feel free to use it and contribute to the project as well. Please ⭐️ this repo to show your support and make my day!

I’m planning to work on the next items in this roadmap.md. Feel free to comment with your thoughts (if any) and influence my work (if interested).

Lastly, macOS DMGs are available in Releases.

Now let’s move on to its sister project, an enabler for serving MLX models: PyOMlx

PyOMlx - A wannabe Ollama equivalent for Apple MLX models

PyOMlx: A macOS app capable of discovering, loading & serving Apple MLX models downloaded from the Apple MLX Community repo on Hugging Face 🤗.

Inspired by Ollama, I designed PyOMlx to have the same (or a similar) user experience as one would get with Ollama. Simply opening the app starts a server in the background that serves the Apple MLX models over the network. There is also an indicator in the system tray showing that the app is running, with an easy control to exit the app right there.

The idea of this app is currently very simple, i.e. serve MLX models over the network to a locally hosted PyOllaMx. However, if you check the roadmap.md, I have ambitious plans to mimic Ollama as much as possible, including support for OpenAI chat completions endpoints.
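For a feel of what OpenAI-compatible chat completions support would involve, here is a small sketch of the two translation steps a server needs: turning the incoming list of chat messages into a prompt, and wrapping the generated text in the response shape that OpenAI clients expect. The function names are hypothetical, and the naive "role: content" flattening is purely an assumption for illustration; a real server would apply the model’s own chat template.

```python
import time
import uuid
from typing import Dict, List


def messages_to_prompt(messages: List[Dict[str, str]]) -> str:
    """Flatten OpenAI-style chat messages into a single prompt string (naive template)."""
    lines = [f"{message['role']}: {message['content']}" for message in messages]
    return "\n".join(lines + ["assistant:"])


def to_chat_completion(model: str, text: str) -> dict:
    """Wrap generated text in the response shape of the chat completions API."""
    return {
        "id": f"chatcmpl-{uuid.uuid4().hex}",
        "object": "chat.completion",
        "created": int(time.time()),
        "model": model,
        "choices": [
            {
                "index": 0,
                "message": {"role": "assistant", "content": text},
                "finish_reason": "stop",
            }
        ],
    }
```

The appeal of matching this shape is that existing OpenAI client libraries and tools could then point at the local server by changing only the base URL.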

Once again, macOS DMGs are available in Releases, and don’t forget to ⭐️ this repo to show your support and make my day!

Putting it all together: Showtime 🎥 🎬 🍿

I also publish a newsletter where I share my techno adventures at the intersection of Telecom, AI/ML, SW Engineering and Distributed Systems. If you’d like my posts delivered directly to your inbox whenever I publish, consider subscribing to my Substack.

I pinky promise 🤙🏻: I won’t sell your emails!