---
title: LPW Insights Architecture
---
%%{
  init : {
    'flowchart' : {
      'useMaxWidth' : true,
      'nodeSpacing' : 50,
      'rankSpacing' : 150
    }
  }
}%%
graph LR
    A["User"]
    B["LPW Insights ✨"]
    C["Crew AI 🤖"]
    D["🦈 PyShark"]
    A -- 1. Get PCAP file --> B
    B -- 2. Extract PCAP Text Data --> D
    D -- 3. Return PCAP Text Data --> B
    B -- 4. Send Task + PCAP Text Data --> C
    C -- 5. Kick off Crew to produce output --> C
    C -- 6. Return Agent Response --> B
    B -- 7. Present back to User --> A

LPW Insights | Agentic LPW | Project Updates

A few months ago, I launched a hobby project called Local Packet Whisperer to explore how LLMs can be used in Wireshark pcap/pcapng analysis.

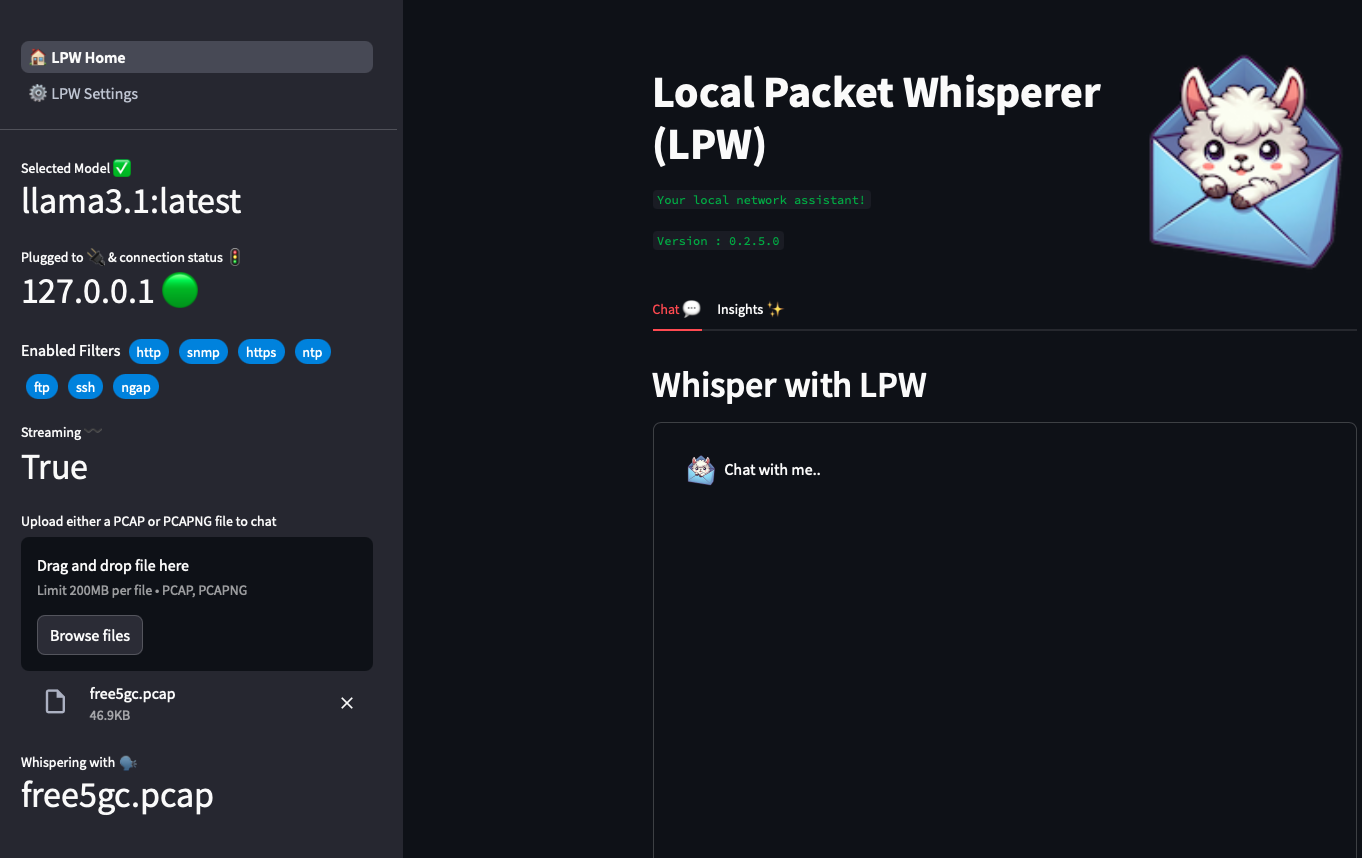

For the uninitiated, Local Packet Whisperer (LPW) is an innovative project designed to facilitate local and private interactions with PCAP/PCAPNG files using a combination of Ollama, Streamlit, and PyShark. It serves as a 100% local assistant powered by customizable local large language models (LLMs), with Streamlit for the front end and PyShark for packet parsing. LPW is easily installable via pip and can connect to an Ollama server over a network.

What started as a hobby project now has 59 stars and 21 forks (as of the publication date).

Since my last update (March 2024🗓️), LPW has grown to v0.2.5.0, the 8th release since its inception. Along the way, several updates were made to LPW, including but not limited to:

✅ Dedicated Settings ⚙️ page to host all of LPW’s settings. As the functionality grew, a dedicated settings page soon became a necessity.

✅ Modern widget views in the sidebar, showing important information like LLM Server Info, Connection Status 🚦, Selected LLM Model 🧠, Streaming Info 🌀, etc.

✅ In addition to connecting to Ollama on localhost, LPW can also connect to Ollama servers on the network. You can also override the Ollama port (default: 11434) if needed.
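To make the host and port override concrete, here is a minimal sketch of how a client could build the server address and check connectivity. It is not LPW's actual code: the function names are hypothetical, and it assumes the server exposes Ollama's REST endpoint `GET /api/tags`, which lists the locally available models.

```python
import json
import urllib.request

DEFAULT_OLLAMA_PORT = 11434  # default port mentioned above


def ollama_base_url(host: str = "localhost", port: int = DEFAULT_OLLAMA_PORT) -> str:
    """Build the base URL for a local or networked Ollama server (hypothetical helper)."""
    return f"http://{host}:{port}"


def list_models(base_url: str, timeout: float = 5.0) -> list[str]:
    """Return the model names reported by GET /api/tags."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        payload = json.load(resp)
    return [model["name"] for model in payload.get("models", [])]


def is_reachable(base_url: str) -> bool:
    """Treat any network or decoding failure as 'not connected'."""
    try:
        list_models(base_url)
        return True
    except (OSError, ValueError):
        return False
```

For example, `ollama_base_url("192.168.1.20", 8080)` would point the client at a server on the network with an overridden port, and `is_reachable(...)` could drive a connection status indicator.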

As the latest update, a new experimental feature called LPW Insights ✨ has also been added. I explain it in more detail in the following section.

Experimenting with LLM Agents

Ever since I was introduced to agentic frameworks like Crew AI, LangGraph, PhiData, etc., I have wondered what an agent use case for LPW could be. Thus, LPW Insights ✨ was born!

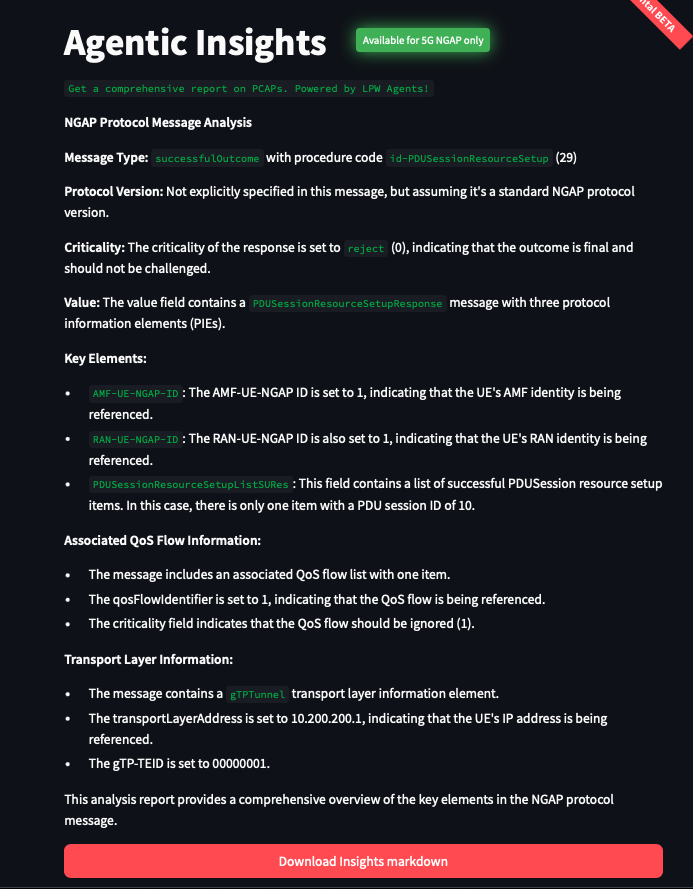

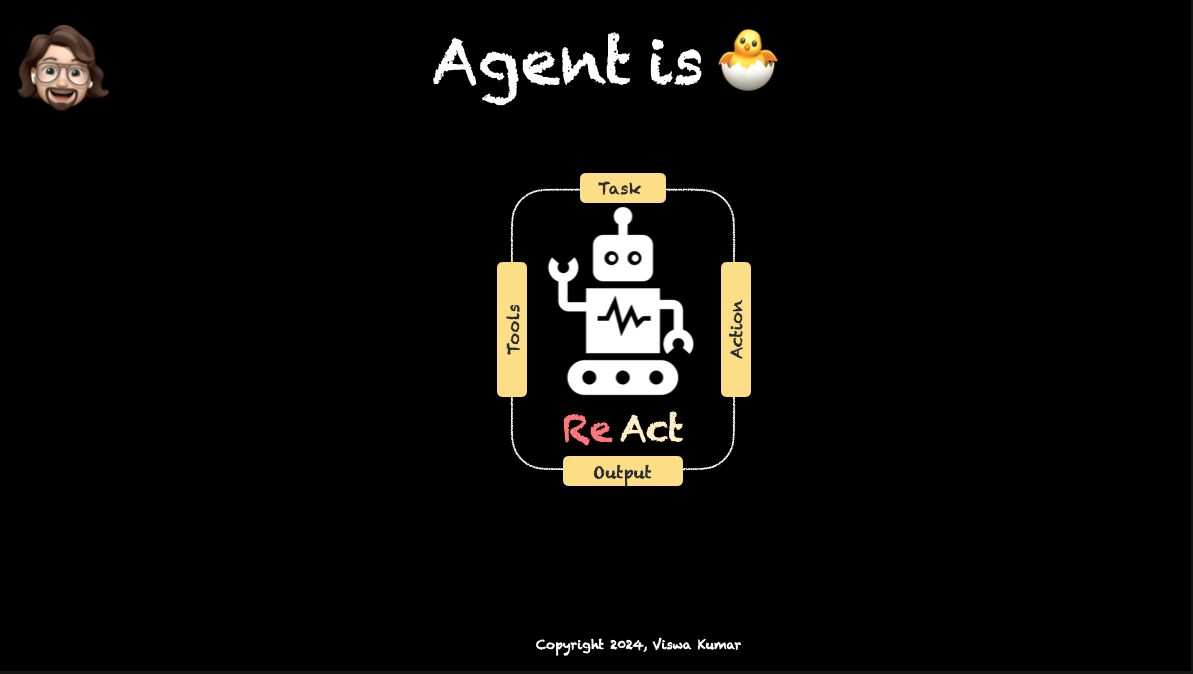

As a humble start, LPW Insights uses an experimental NGAP Specialist Agent that takes the text output of the PCAP file and produces a comprehensive analysis report in markdown format.

But what is an Agent?

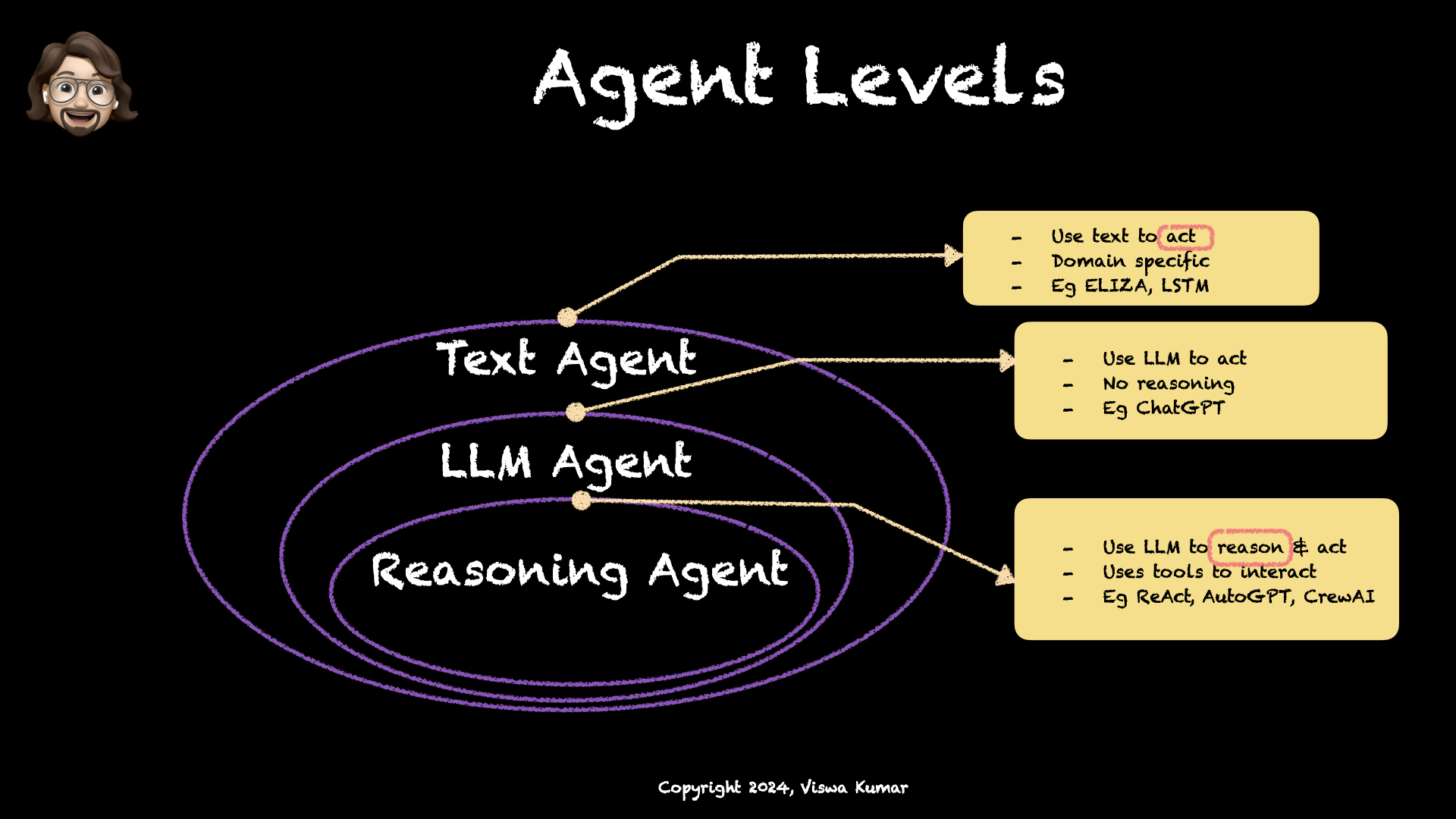

Succinctly put, an LLM Agent is simply an intelligent system that interacts with an environment. But this intelligence is subjective. In fact, it has evolved over the years, as you can see in Figure 2. Broadly put, a Text Agent acts on the given text without any context or accumulated learning. Then came the LLM Agent, which still only acts, but with context based on its learning stage. The next evolution on this path is the Reasoning Agent, which uses an LLM to reason and act together.

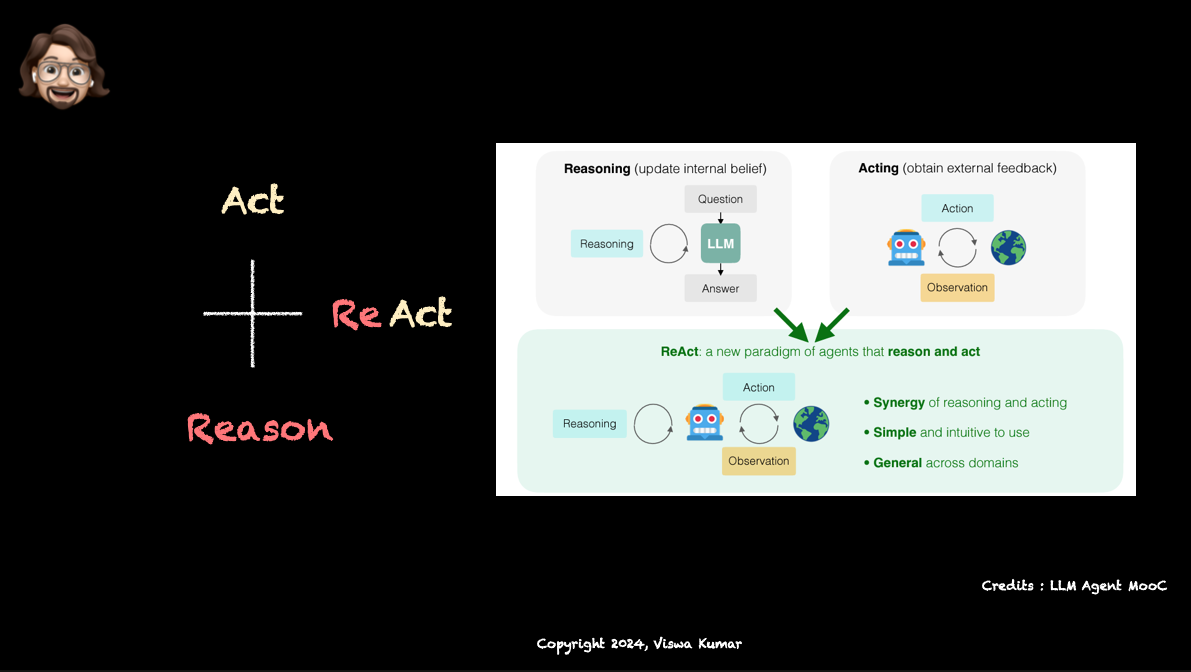

This combination of Reasoning and Acting is what has been dubbed ReAct.

But then, what is ReAct?

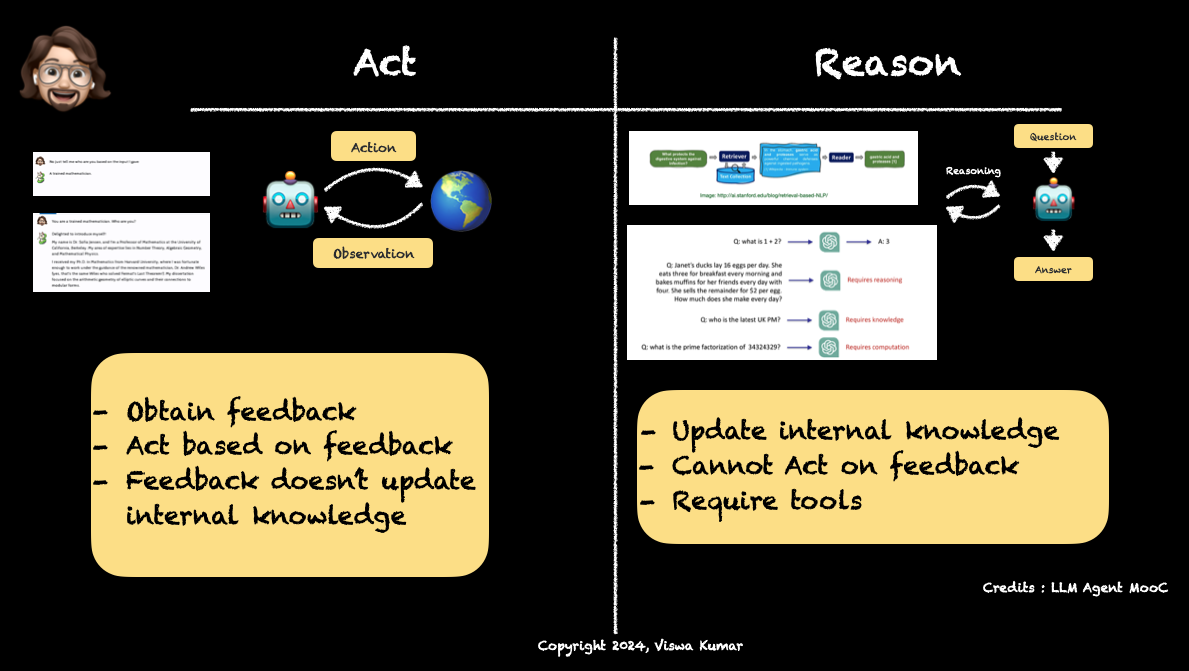

To understand ReAct better, we should first understand the difference between the Act and Reason concepts.

In the Act framework (Figure 3 (a)), the agent (LLM) simply observes the instruction (feedback) and acts on it. This feedback doesn’t change the agent’s internal knowledge base. In the Reasoning framework (Figure 3 (b)), by contrast, the agent (LLM) updates its internal knowledge base, which makes it more of a Q&A bot than a general-purpose LLM capable of following instructions.

Now what if we combine these two, giving the agent access to external tools and room to follow instructions (act), plus a way to update its internal knowledge base through self-prompting techniques? We would get the best of both worlds. This forms the crux of the ReAct framework (Figure 3 (c)).
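The reason-then-act cycle can be sketched in a few lines of Python. This is only a toy illustration under loud assumptions: `scripted_llm` is a hard-coded stand-in for a real LLM, `lookup_default_port` is a hypothetical tool, and the `Thought / Action / Observation / Final Answer` text format is one common convention rather than what any particular framework requires.

```python
from typing import Callable


def lookup_default_port(service: str) -> str:
    """Hypothetical tool: an external lookup the 'model' cannot answer by itself."""
    return {"ollama": "11434"}.get(service, "unknown")


TOOLS: dict[str, Callable[[str], str]] = {"lookup_default_port": lookup_default_port}


def scripted_llm(transcript: str) -> str:
    """Stand-in for a real LLM: asks for a tool first, answers once it sees an observation."""
    if "Observation:" not in transcript:
        return "Thought: I need to look this up.\nAction: lookup_default_port[ollama]"
    observation = transcript.rsplit("Observation: ", 1)[1].strip()
    return f"Thought: I have what I need.\nFinal Answer: The default port is {observation}."


def react(question: str, llm: Callable[[str], str], max_steps: int = 5) -> str:
    """Alternate reasoning (LLM reply) and acting (tool call) until a final answer appears."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        reply = llm(transcript)
        transcript += reply + "\n"
        if "Final Answer:" in reply:
            return reply.split("Final Answer:", 1)[1].strip()
        # Act: parse "Action: tool[argument]" and call the matching tool.
        action = reply.split("Action:", 1)[1].strip()
        tool_name, argument = action.rstrip("]").split("[", 1)
        observation = TOOLS[tool_name](argument)
        # Feed the result back so the next reasoning step can build on it.
        transcript += f"Observation: {observation}\n"
    raise RuntimeError("no final answer within max_steps")


print(react("What is Ollama's default port?", scripted_llm))
```

The growing transcript plays the role of the self-prompting described above: each thought and observation is appended to the prompt, so later steps reason over what earlier steps found.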

LPW Insights using Agents powered by CrewAI

Great! Now that we have a basic understanding of ReAct agents, let me talk about how I brought LPW Insights into the LPW project. For this use case, I explored and used Crew AI.

Crew AI is a pretty beginner-friendly, Python-first project, so it fit naturally into the LPW project. The learning curve is pretty small and the documentation is pretty useful for getting started. I do have to warn you about stability and community support, though. Since agentic LLM is a nascent field and Crew AI is actively evolving (they are pushing bi-weekly releases 😱), the road to delivery is not a peaceful one! 😂 Plus, they have a weird 🙃🙃 GitHub issues policy where any bug that is not assigned to someone for 5 days gets auto-closed. So obviously there are rough edges, but I nevertheless found Crew AI to be pretty usable.

Okay, now back to LPW Insights. The LPW Insights Architecture diagram shows how Crew AI is used to put things together. At a high level, PyShark converts the incoming PCAP into a textual representation. With this as input, a 5G NGAP specialist agent is constructed with the task of producing a comprehensive protocol analysis report in markdown format. The agent’s output is then collected and presented back in the Streamlit front end.
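As a rough sketch of that flow (not LPW's actual source), the steps could be wired together as below. The function names, the prompt wording, and the agent's role, goal and backstory strings are all assumptions made for illustration. The sketch uses PyShark's `FileCapture` and CrewAI's `Agent`, `Task` and `Crew` classes, imported lazily so the glue code can be exercised without either library installed. LLM configuration (for example, pointing the agent at an Ollama model) is deliberately left out.

```python
def pcap_to_text(pcap_path: str, max_packets: int = 50) -> str:
    """Steps 2-3: dump the first packets of a capture to text (needs pyshark and tshark)."""
    import pyshark  # lazy import: only required when a real capture is parsed

    capture = pyshark.FileCapture(pcap_path)
    try:
        packets = []
        for index, packet in enumerate(capture):
            if index >= max_packets:
                break
            packets.append(str(packet))
    finally:
        capture.close()
    return "\n".join(packets)


def build_task_description(pcap_text: str) -> str:
    """Step 4: combine the task instruction with the PCAP text (assumed prompt wording)."""
    return (
        "Analyze the following NGAP packet dump and write a comprehensive "
        "protocol analysis report in markdown format.\n\n" + pcap_text
    )


def run_ngap_crew(task_description: str) -> str:
    """Steps 5-6: build a single-agent crew and kick it off (needs crewai)."""
    from crewai import Agent, Crew, Task  # lazy import, as above

    # No llm= is passed here, so CrewAI would use its own default LLM.
    analyst = Agent(
        role="5G NGAP specialist",
        goal="Explain what happens in the captured NGAP traffic",
        backstory="An engineer experienced in 5G signalling analysis.",
    )
    task = Task(
        description=task_description,
        expected_output="A protocol analysis report in markdown",
        agent=analyst,
    )
    crew = Crew(agents=[analyst], tasks=[task])
    return str(crew.kickoff())


def generate_insights(pcap_path: str, extract=pcap_to_text, analyze=run_ngap_crew) -> str:
    """Steps 1-7 end to end; extract/analyze are injectable so they can be stubbed."""
    pcap_text = extract(pcap_path)
    return analyze(build_task_description(pcap_text))
```

Passing `extract` and `analyze` as parameters keeps the packet parsing and the agent call swappable, which is handy for trying the flow with canned text before involving tshark or an LLM.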

What’s next? & Resources!

Well, the next stage in my experimentation is to evolve to a multi-agent framework. Instead of relying on a single agent, what if we throw in agents for each protocol and for each speciality, like network topology, standards compliance, sequence call flow creation, anomaly detection, etc.? Plus, what if we hook these agents up to the internet so they can query additional context from the web? It sounds fun even just to imagine it.

While I continue with my wildest imaginations, if you are curious about this project, you can find the complete guide to install & use LPW here. Furthermore, your comments, issues & PRs are always welcome. Also, don’t forget to ⭐️ the repo! 🤗

I also publish a newsletter where I share my tech adventures at the intersection of Telecom, AI/ML, SW Engineering and Distributed Systems. If you would like my posts delivered directly to your inbox whenever I publish, consider subscribing to my Substack.

I pinky promise 🤙🏻. I won’t sell your emails!